In recent months, Multimodal Large Language Models (MLLMs) have advanced quickly, letting users discuss images with Large Language Models (LLMs) in natural language. One challenge remains: communicating exact locations within visual content. An MLLM can understand an image as a whole, but neither the user nor the model has an easy way to refer to a specific position in it. Shikra, an MLLM developed by Chinese researchers from SenseTime Research, SKLSDE, Beihang University, and Shanghai Jiao Tong University, is designed to close this gap.

Applications of Multimodal Language Models

MLLMs already let users discuss and describe input images, but they fall short when a user needs to convey a precise location within the visual content. In everyday conversation, people naturally refer to distinct areas or items in a scene and share information by talking and pointing at the same time. Referential Dialogue (RD) is the task proposed to bring this ability to MLLMs: the model accepts spatial coordinates as part of a question and can return coordinates as part of its answer.

Introducing Shikra: A Unified Model for Spatial Coordinates

Shikra is a unified model that handles spatial coordinates as both input and output in natural language. It integrates visual and textual information without additional vocabularies or position encoders. Instead, every coordinate, whether supplied by the user or produced by the model, is written as plain numbers embedded in the text. This keeps the architecture simple and removes the need for pre- or post-detection modules and external plug-in models.
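To make the idea of coordinates written as plain numbers concrete, here is a minimal sketch of how a bounding box could be turned into text and read back out of a generated answer. The bracketed `[x0,y0,x1,y1]` layout, the normalization to the 0 to 1 range, and the three-decimal precision are assumptions made for illustration, and the function names `box_to_text` and `text_to_boxes` are hypothetical; none of this is taken from Shikra's code.

```python
import re

def box_to_text(box, width, height, precision=3):
    """Render a pixel-space box (x0, y0, x1, y1) as normalized plain text.

    Assumed format for illustration: "[x0,y0,x1,y1]" with values in [0, 1].
    """
    x0, y0, x1, y1 = box
    values = (x0 / width, y0 / height, x1 / width, y1 / height)
    return "[" + ",".join(f"{v:.{precision}f}" for v in values) + "]"

# Matches four comma-separated non-negative decimals inside square brackets.
_BOX_PATTERN = re.compile(
    r"\[(\d*\.?\d+),(\d*\.?\d+),(\d*\.?\d+),(\d*\.?\d+)\]"
)

def text_to_boxes(text, width, height):
    """Find every bracketed box in a piece of text and map it back to pixels."""
    boxes = []
    for match in _BOX_PATTERN.finditer(text):
        nx0, ny0, nx1, ny1 = (float(g) for g in match.groups())
        boxes.append((nx0 * width, ny0 * height, nx1 * width, ny1 * height))
    return boxes

# A user question that points at a region of a 640x480 image.
region = box_to_text((64, 48, 320, 432), 640, 480)
prompt = f"What is the person at {region} holding?"
```

Because the coordinates are ordinary text, the same tokenizer and vocabulary serve both the question and the answer, which is the design choice the paragraph above describes.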

Components of Shikra Architecture

Shikra’s architecture comprises three components: a vision encoder, an alignment layer, and a Large Language Model (LLM). The vision encoder processes the input image and extracts visual features. The alignment layer maps those features into a form the language model can consume, so that visual and textual information share one input space. The LLM then interprets the combined input and generates the response in natural language.
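The data flow through these three components can be sketched in a few lines. This is a toy illustration, not Shikra's implementation: the stand-in encoder returns random features, the alignment layer is assumed to be a single linear projection, and the function names and dimensions are hypothetical.

```python
import random

def vision_encoder(image_patches, feature_dim=4):
    """Stand-in encoder: returns one feature vector per image patch.

    A real encoder would compute these from pixels; random values are
    used here only to show the shapes involved.
    """
    rng = random.Random(0)
    return [[rng.uniform(-1, 1) for _ in range(feature_dim)]
            for _ in image_patches]

def alignment_layer(visual_features, weights, bias):
    """Assumed linear projection from visual features to LLM embedding size.

    `weights` holds one row per output dimension; each row has one entry
    per visual feature dimension.
    """
    return [
        [sum(f * w for f, w in zip(feat, row)) + b
         for row, b in zip(weights, bias)]
        for feat in visual_features
    ]

def build_llm_input(visual_tokens, text_embeddings):
    """Concatenate projected visual tokens with text embeddings for the LLM."""
    return visual_tokens + text_embeddings

# Three image patches, projected from 4 to 6 dimensions, plus two text tokens.
patches = ["patch0", "patch1", "patch2"]
features = vision_encoder(patches)
weights = [[0.1] * 4 for _ in range(6)]
bias = [0.0] * 6
visual_tokens = alignment_layer(features, weights, bias)
llm_input = build_llm_input(visual_tokens, [[0.0] * 6, [0.0] * 6])
```

The point of the sketch is the ordering: image features are projected into the language model's embedding space first, and only then combined with the text the model reads.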

User Interactions and Capabilities of Shikra

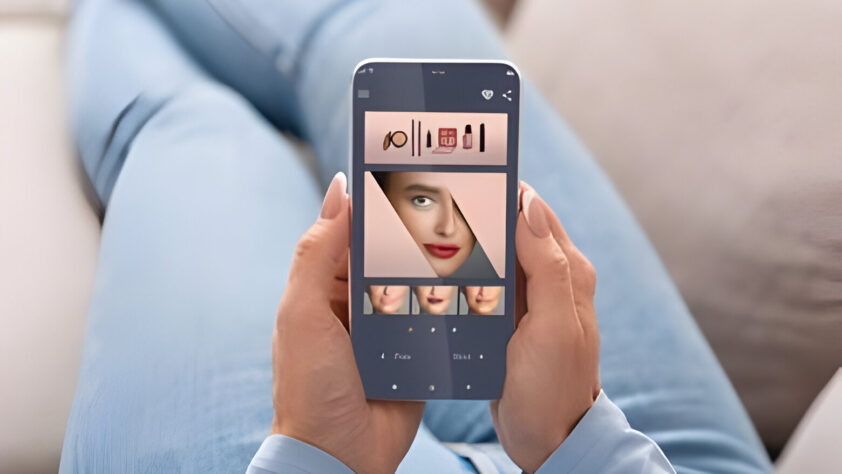

Shikra gives users referential dialogue capabilities: they can indicate a specific area or item within an image and ask the AI assistant about it directly. This is particularly useful with extended reality (XR) headsets such as the Apple Vision Pro, where Shikra could provide immediate visual feedback aligned with the user’s field of vision. The same capability could help visual robots understand and respond to the particular reference points a person indicates, making human-robot interaction more efficient. It could also help online shoppers by providing detailed information about an item of interest within an image.

Shikra’s Versatility in Visual Language Tasks

Shikra’s usefulness is not limited to Referential Dialogue. It also shows promising results on conventional vision-language tasks. These include Visual Question Answering (VQA), where Shikra answers questions about visual content, and image captioning, where it generates a description of the input image. Its capabilities further extend to Referring Expression Comprehension (REC), where the model locates the object or region that a phrase describes, and to pointing tasks, where a query refers to a specific point or region within the image.
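For readers unfamiliar with how REC predictions are usually checked, the sketch below shows the conventional metric: a predicted box counts as correct when its intersection over union (IoU) with the reference box reaches a threshold, commonly 0.5. The article does not state which metric was used for Shikra, so the threshold and the function names `iou` and `rec_accuracy` are assumptions for illustration.

```python
def iou(box_a, box_b):
    """Intersection over union of two boxes given as (x0, y0, x1, y1)."""
    ax0, ay0, ax1, ay1 = box_a
    bx0, by0, bx1, by1 = box_b
    # Width and height of the overlap; zero when the boxes do not intersect.
    inter_w = max(0.0, min(ax1, bx1) - max(ax0, bx0))
    inter_h = max(0.0, min(ay1, by1) - max(ay0, by0))
    inter = inter_w * inter_h
    union = (ax1 - ax0) * (ay1 - ay0) + (bx1 - bx0) * (by1 - by0) - inter
    return inter / union if union > 0 else 0.0

def rec_accuracy(predictions, targets, threshold=0.5):
    """Fraction of predicted boxes whose IoU with the target meets the threshold."""
    if not targets:
        return 0.0
    hits = sum(1 for p, t in zip(predictions, targets) if iou(p, t) >= threshold)
    return hits / len(targets)
```

Since the model emits boxes as text, an evaluation along these lines would first parse the numbers out of the generated answer and then compare them with the annotated box.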

Exploring Location Representation in Images

One notable aspect of Shikra’s development is its exploration of how to represent location within images. The researchers investigate whether MLLMs can comprehend absolute positions and whether using positional information during reasoning leads to more precise responses to user queries. These analytical experiments provide useful insights and may spur future research on MLLMs.

Conclusion

Shikra marks a notable step for Multimodal Large Language Models, allowing humans and AI systems to exchange spatial coordinates as part of an ordinary conversation. Its ability to handle referential dialogue, together with its versatility across vision-language tasks, holds promise for numerous applications. By examining how location is represented in images and how positional information can be used in reasoning, the associated research opens the door to further advances in the field. Shikra’s code is available on GitHub for researchers and developers who want to build on it.